Auditory Memories in a Model System

Environmental sounds and animal vocalizations mostly occupy a subset of all physically plausible sounds. The properties of the sound emitter, combined with the effects of the environment, determine what we call the statistics of natural sounds. These statistics in turn determine the sound features that can carry information in communication signals.

The auditory system that mediates the perception of rhythm, pitch and timbre must therefore be sensitive to particular classes of sound features, organized along dimensions that are closely related both to the major perceptual dimensions and to the dimensions that characterize the statistics of natural sounds. Such a neural representation must be the result of computations along the auditory processing stream, because it is absent at the auditory periphery, where the inner ear decomposes the sound pressure waveform into frequency channels. This decomposition yields an efficient representation for transmitting a reliable copy of the sound pressure waveform but, by itself, does not provide any of the synthesis required for extracting the information-bearing features of complex sounds.
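As a rough illustration of what a decomposition into frequency channels means, the sketch below measures how much energy a waveform carries at a few center frequencies. It is a deliberately crude stand-in: the function name `band_energies`, the sample rate and the choice of center frequencies are assumptions made for this example, and a single-bin Fourier projection is not a model of the actual cochlear filters.

```python
import cmath
import math

def band_energies(waveform, sample_rate, center_freqs):
    """Normalized energy of the waveform at each center frequency.

    Each channel is a single-bin discrete Fourier projection, used here
    only as a simplified stand-in for a peripheral frequency channel.
    """
    n = len(waveform)
    energies = []
    for f in center_freqs:
        # Project the waveform onto a complex sinusoid at frequency f.
        acc = sum(x * cmath.exp(-2j * math.pi * f * k / sample_rate)
                  for k, x in enumerate(waveform))
        energies.append(abs(acc) ** 2 / n ** 2)
    return energies

# Hypothetical test signal: a pure 1 kHz tone lasting 0.1 s.
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * k / sample_rate) for k in range(800)]
energies = band_energies(tone, sample_rate, [500.0, 1000.0, 2000.0])
```

Only the 1 kHz channel carries appreciable energy for this tone. The channel outputs say where the energy lies in frequency, but nothing about rhythm, pitch or timbre, which is the sense in which the peripheral representation lacks the synthesis described above.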

The major hypotheses that we are investigating can therefore be framed around a central idea: the three separate dimensional spaces characterizing the statistics of natural sounds, the major auditory perceptual features and the neural representation at the higher levels in the auditory system are related in the strong sense of being similar and interdependent.


Neural representations of complex sounds


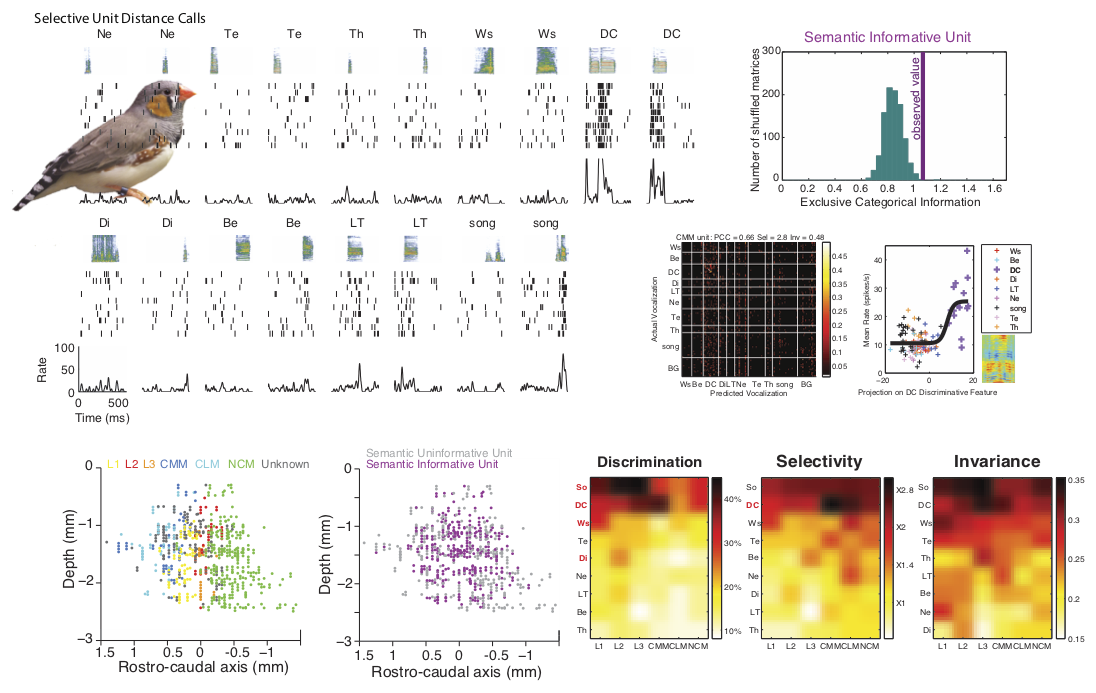

Our neurophysiological studies are currently carried out in songbirds. We have chosen this avian animal model for two reasons. First, songbirds use a rich repertoire of communication calls in complex social behaviors, such as territorial defense and long-term pair bonding. Second, songbirds are one of the few groups of vertebrates that are capable of vocal learning. In part because of this unusual trait, the neuroethological study of birdsong and vocal learning has been a very active and productive area of research. For our research goals, the rich vocal repertoire of zebra finches allows us to investigate how behaviorally relevant complex communication sounds are represented in the auditory system. In addition, we can study how the auditory system and the vocal system interact; more specifically, we can examine the input to the song system from auditory areas when the bird hears its tutor’s song or its own vocalizations.


In recent years, we have made significant progress in deciphering the neural representation of complex sounds in the higher auditory areas of songbirds. To do so, we have estimated the stimulus-response function of a large number of neurons in the auditory midbrain and in the primary and secondary avian auditory cortex. In most of our studies, the stimulus-response function has been described in terms of the spectro-temporal receptive field (STRF), the linear gain between the sound stimulus in its spectrographic representation and the time-varying mean firing rate of a particular neuron. In the avian auditory cortex, we found that, based on their STRFs, neurons could be classified into three large functional groups (narrowband, wideband and broadband) as well as two other smaller groups (offset and hybrid). We showed how these distinct functional classes encode features of sounds that are essential for different percepts (Woolley et al., J. Neurosci. 2009).
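To make the STRF description concrete, the sketch below shows the forward direction of the linear model: given an STRF and a spectrogram, the predicted firing rate at each time bin is a weighted sum of recent spectrogram values across frequency bands and time lags. The function name `predict_rate`, the `baseline` parameter and the toy values are assumptions made for this illustration; it is not the estimation procedure used in the studies cited above.

```python
def predict_rate(strf, spectrogram, baseline=0.0):
    """Linear STRF prediction of a time-varying firing rate.

    r(t) = baseline + sum over f and tau of strf[f][tau] * spectrogram[f][t - tau]

    strf:        list of frequency bands, each a list of weights per time lag
    spectrogram: list of frequency bands, each a list of values per time bin
    """
    n_freq = len(strf)
    n_lags = len(strf[0])
    n_time = len(spectrogram[0])
    rates = []
    for t in range(n_time):
        total = baseline
        for f in range(n_freq):
            for tau in range(n_lags):
                if t - tau >= 0:  # skip lags that reach before the stimulus start
                    total += strf[f][tau] * spectrogram[f][t - tau]
        rates.append(total)
    return rates

# Toy example (hypothetical values): 2 frequency bands, 3 time lags.
toy_strf = [[0.0, 1.0, 0.0],    # band 0: excitatory at a lag of 1 bin
            [0.0, 0.0, -0.5]]   # band 1: suppressive at a lag of 2 bins
toy_spec = [[1.0, 0.0, 0.0, 0.0, 0.0],   # brief energy in both bands at t = 0
            [1.0, 0.0, 0.0, 0.0, 0.0]]
toy_rates = predict_rate(toy_strf, toy_spec)
# toy_rates == [0.0, 1.0, -0.5, 0.0, 0.0]
```

The negative value in the toy output is a property of the purely linear model, which is best read as a modulation around a mean rate; fitted models commonly add a rectifying output stage so that predicted rates stay non-negative.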

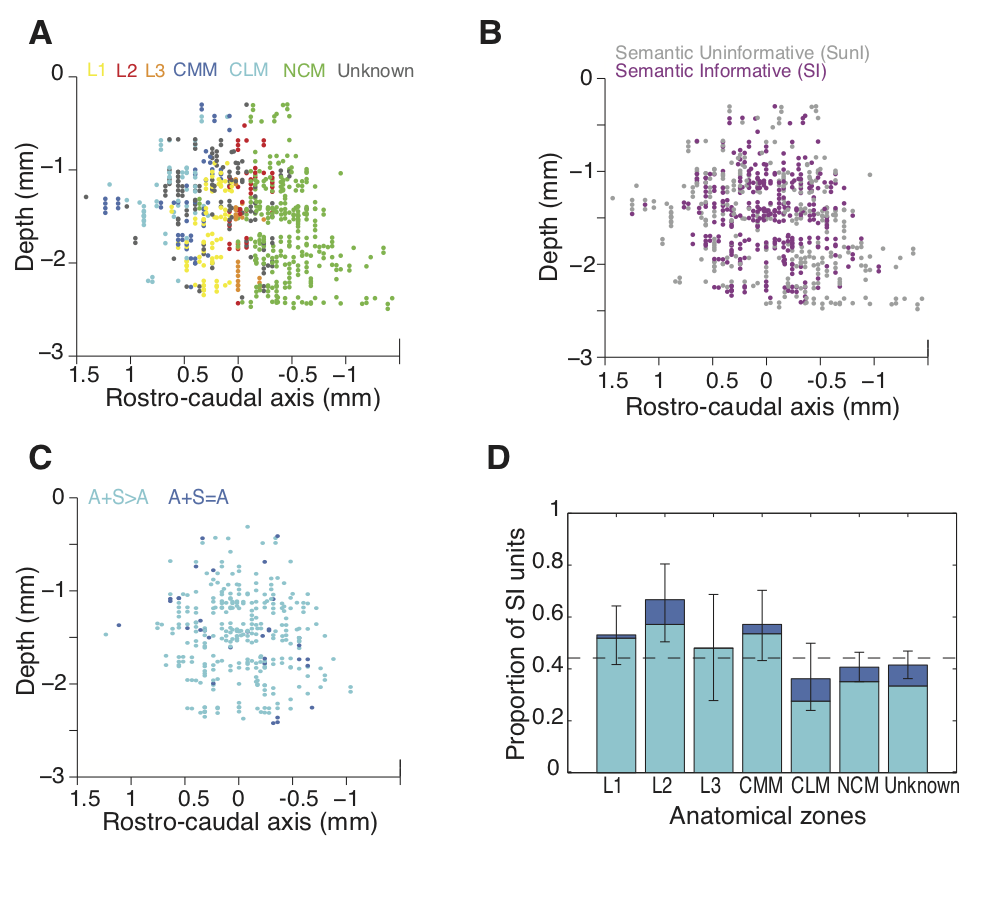

We have also examined the coding of natural sounds at different levels of auditory processing. We have recorded and analyzed the neural activity in the auditory midbrain (area MLd – analogous to the mammalian inferior colliculus), in the primary auditory pallium (area Field L – analogous to primary auditory cortical areas A1 and AAF), and in a secondary auditory cortical area that has been implicated in the processing of familiar conspecific vocalizations (area CM). We found clear evidence for hierarchical processing. STRFs in Field L form a more heterogeneous set and are more complex than those in MLd (Woolley et al., J. Neurosci. 2009). At the next level of processing, in CM, the neural responses show further complexity and the simple STRF description fails to capture the stimulus-response function (Gill et al., J. Neurophys. 2008).

On the one hand, this analysis revealed how neural representations at higher levels could be obtained by step-wise computations. On the other hand, a simple feed-forward model did not explain all the observed differences among these interconnected areas. Neurons in MLd appeared to be particularly sensitive to the temporal structure of the sound (more so than higher-level neurons), and this sensitivity increased when processing conspecific vocalizations compared with matched noise (Woolley et al., J. Neurosci. 2006). In CM, neurons were selective for complex sound features in an unusual way. In lower areas, feature-selective neurons increase their firing rates as the intensity (or contrast) of the feature increases. In CM, by contrast, neurons increased their firing rates in proportion to how surprising this intensity is given the past stimulus (Gill et al., J. Neurophys. 2008). We believe that this neural representation for detecting unexpected complex features is a result of experience with behaviorally relevant sounds and yields an efficient code for incorporating new auditory memories.

In summary, by comparing the neural coding at three levels of the auditory processing stream that are known to feed into each other (MLd to Field L and Field L to CM), we found evidence for hierarchical processing in which we can identify intermediate steps towards building neurons that are sensitive to distinct subsets of complex acoustical features. These subsets tile a region of the acoustical space that is highly informative when processing natural sounds, with each subset extracting invariant features that are important for different percepts. But we also found evidence for unique coding properties in each area, suggesting that parallel channels with specialized functions are already in place starting at the level of the auditory midbrain.
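The contrast between intensity coding and surprise coding can be illustrated with a toy sketch. Here the expected feature intensity is simply the mean of the last few time bins, and surprise is the absolute deviation from that expectation. Both choices, along with the names `intensity_response`, `surprise_response`, `window` and `gain`, are assumptions made for this illustration and are not the model fitted in Gill et al. (2008).

```python
def intensity_response(feature, gain=1.0):
    """Response that grows with the intensity of the feature itself."""
    return [gain * x for x in feature]

def surprise_response(feature, window=3, gain=1.0):
    """Response that grows with the deviation from a recent-history expectation.

    The expectation is the mean of the previous `window` values
    (0.0 when there is no history yet), an assumption of this sketch.
    """
    out = []
    for t, x in enumerate(feature):
        past = feature[max(0, t - window):t]
        expected = sum(past) / len(past) if past else 0.0
        out.append(gain * abs(x - expected))
    return out

# Hypothetical feature time course: sustained, then suddenly absent.
steady_then_gap = [1.0, 1.0, 1.0, 1.0, 1.0, 0.0]
by_intensity = intensity_response(steady_then_gap)
by_surprise = surprise_response(steady_then_gap)
# by_intensity == [1.0, 1.0, 1.0, 1.0, 1.0, 0.0]
# by_surprise  == [1.0, 0.0, 0.0, 0.0, 0.0, 1.0]
```

In this toy case the intensity-coding unit responds for as long as the feature is present, whereas the surprise-coding unit responds only at the onset and at the unexpected omission, the two moments when the stimulus departs from what its recent past predicts.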